Hadoop Servers Expose Over 5 Petabytes of Data

Improperly configured HDFS-based servers, mostly Hadoop installs, are exposing over five petabytes of information, according to John Matherly, founder of Shodan, a search engine for discovering Internet-connected devices.

The expert says he discovered 4,487 instances of HDFS-based servers available via public IP addresses and without authentication, which in total exposed over 5,120 TB of data.

According to Matherly, 47,820 MongoDB servers exposed only 25 TB of data. To put things in perspective, HDFS servers leak 200 times more data than MongoDB servers, even though MongoDB servers are ten times more prevalent. A 2015 report[1] from Binary Edge revealed that, at the time, Redis, MongoDB, Memcached, and ElasticSearch servers together exposed a total of only 1.1 PB of data.
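The two ratios in the paragraph above can be checked with a few lines of arithmetic. This sketch uses only the figures quoted from Matherly (4,487 HDFS servers holding 5,120 TB, and 47,820 MongoDB servers holding 25 TB). The per-server averages are derived here and are not numbers from his research. Because the article equates 5,120 TB with 5 PB (that is, 1 PB = 1,024 TB), the sketch uses the same binary convention when converting to gigabytes.

```python
# Figures quoted in the article (TB = terabytes).
HDFS_SERVERS, HDFS_TB = 4_487, 5_120
MONGO_SERVERS, MONGO_TB = 47_820, 25

# The article treats 5,120 TB as 5 PB, i.e. binary units; keep that convention.
GB_PER_TB = 1_024


def exposure_ratios():
    """Return (data ratio, server-count ratio, avg TB per HDFS server, avg GB per MongoDB server)."""
    data_ratio = HDFS_TB / MONGO_TB              # how much more data HDFS exposes
    server_ratio = MONGO_SERVERS / HDFS_SERVERS  # how much more common MongoDB is
    hdfs_avg_tb = HDFS_TB / HDFS_SERVERS
    mongo_avg_gb = MONGO_TB * GB_PER_TB / MONGO_SERVERS
    return data_ratio, server_ratio, hdfs_avg_tb, mongo_avg_gb


if __name__ == "__main__":
    data_ratio, server_ratio, hdfs_avg, mongo_avg = exposure_ratios()
    print(f"HDFS exposes {data_ratio:.1f}x more data")             # 204.8x
    print(f"MongoDB servers are {server_ratio:.1f}x more common")  # 10.7x
    print(f"Average per HDFS server: {hdfs_avg:.2f} TB")           # 1.14 TB
    print(f"Average per MongoDB server: {mongo_avg:.2f} GB")       # 0.54 GB
```

In other words, "200 times" is a rounded 204.8 and "ten times" a rounded 10.7. On average, each exposed HDFS server leaks over 1 TB, versus roughly half a gigabyte per exposed MongoDB server.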

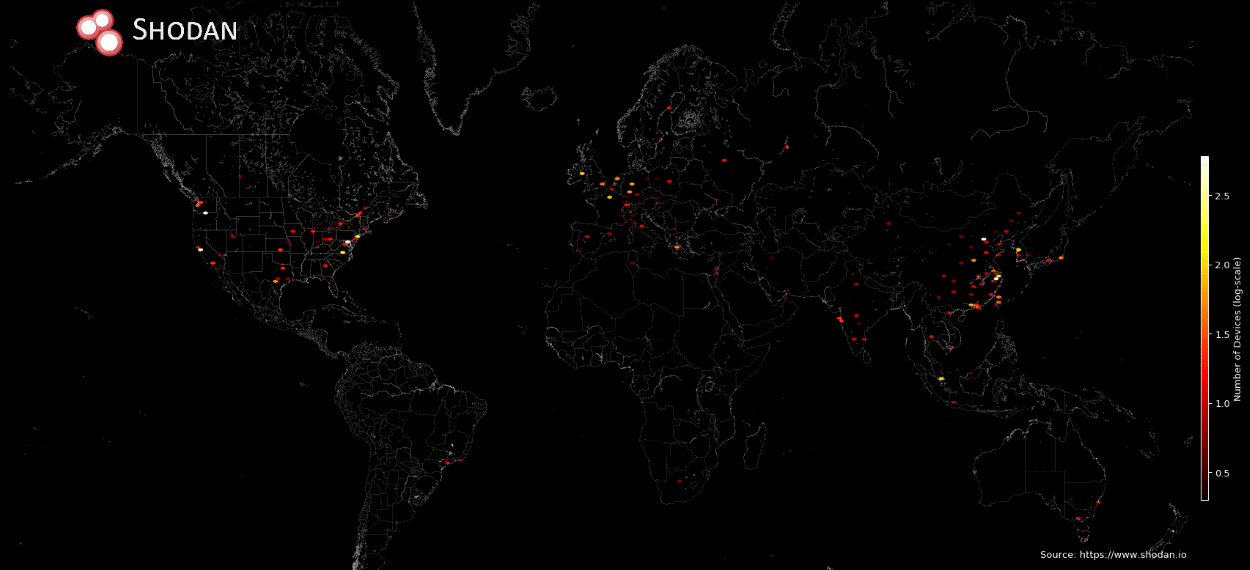

Most HDFS systems are located in the US and China

By far, the countries with the most exposed HDFS instances are the US and China, which should come as no surprise, as these two countries host over 50% of all data centers in the world[2].

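Top 10 countries by exposed HDFS instances:

| Country | Exposed HDFS instances |
|---------|-----------------------:|
| US      | 1,900 |
| CN      | 1,426 |
| DE      | 129 |
| KR      | 115 |
| FR      | 91 |
| SG      | 86 |
| IE      | 82 |
| IN      | 74 |
| TW      | 66 |
| CA      | 43 |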
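To put a number on "by far", the following sketch works only from the counts in the table and the 4,487 total reported by Matherly. The percentages are derived here for illustration and were not part of his findings.

```python
# Exposed HDFS instances per country, as listed in the top 10 above.
TOP_10 = {
    "US": 1_900, "CN": 1_426, "DE": 129, "KR": 115, "FR": 91,
    "SG": 86, "IE": 82, "IN": 74, "TW": 66, "CA": 43,
}
TOTAL_INSTANCES = 4_487  # total number of exposed instances reported by Matherly


def share(countries):
    """Percentage of all exposed instances located in the given countries."""
    return 100 * sum(TOP_10[c] for c in countries) / TOTAL_INSTANCES


if __name__ == "__main__":
    print(f"US + CN: {share(['US', 'CN']):.1f}%")  # 74.1%
    print(f"Top 10:  {share(TOP_10):.1f}%")        # 89.4%
    # Instances located outside the top 10 countries.
    print(f"Rest of world: {TOTAL_INSTANCES - sum(TOP_10.values())}")  # 475
```

The US and China alone account for roughly three quarters of all exposed instances, and the ten countries listed cover nearly 90%.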

Earlier this year, attackers realized they could take over unprotected servers exposed online, steal their content, and demand a ransom. Attacks first targeted MongoDB, but they soon moved to CouchDB and Hadoop.

Initially[3], 124 Hadoop servers were ransomed, a number that eventually grew to almost 500[4]. According to Matherly, 207 HDFS-based clusters still display ransom demands, although it is unclear whether these are leftovers from the January attacks or servers that are still being hijacked.

HDFS, short for Hadoop Distributed File System, is a distributed file system designed to run across clusters of commodity servers. It is the storage layer at the core of Apache Hadoop, but it is also deployed with custom solutions. HDFS systems are usually found in cloud hosting environments, where they store and process large quantities of user data.

Instructions for configuring a Hadoop server to run in secure mode are available online[5], and Matherly has published[6] the steps needed to reproduce his research.
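As a rough first check before working through the secure-mode instructions, an administrator can inspect two `core-site.xml` properties that secure mode relies on: `hadoop.security.authentication`, which defaults to `simple` (client identities are not verified) and is set to `kerberos` in secure mode, and `hadoop.security.authorization`, which defaults to `false`. The helper below is hypothetical and not part of Hadoop. It only parses the XML file, and it does not cover the other steps secure mode requires (such as Kerberos principals and keytabs for each daemon), nor does it replace keeping the cluster off the public Internet.

```python
import xml.etree.ElementTree as ET

# Defaults as documented in Hadoop's core-default.xml.
DEFAULTS = {
    "hadoop.security.authentication": "simple",
    "hadoop.security.authorization": "false",
}


def read_properties(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()
    return props


def security_warnings(xml_text):
    """Return warnings for core-site.xml settings that leave the cluster open.

    Hypothetical helper for illustration; it checks only two properties and
    falls back to Hadoop's defaults when a property is not set.
    """
    props = {**DEFAULTS, **read_properties(xml_text)}
    warnings = []
    if props["hadoop.security.authentication"].lower() != "kerberos":
        warnings.append("hadoop.security.authentication is not 'kerberos' "
                        "(client identities are not verified)")
    if props["hadoop.security.authorization"].lower() != "true":
        warnings.append("hadoop.security.authorization is not 'true' "
                        "(service-level authorization is off)")
    return warnings


if __name__ == "__main__":
    insecure = "<configuration></configuration>"
    secure = """<configuration>
      <property><name>hadoop.security.authentication</name><value>kerberos</value></property>
      <property><name>hadoop.security.authorization</name><value>true</value></property>
    </configuration>"""
    print(security_warnings(insecure))  # two warnings: both defaults are insecure
    print(security_warnings(secure))    # []
```

An empty `core-site.xml` triggers both warnings, because the defaults leave authentication at `simple` and authorization off. Passing this check is necessary for secure mode but not sufficient.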
